Lecture 32: Tabu Search#

Overview#

Unlike the Hill Climb algorithm, the Tabu Search algorithm navigates the solution space while avoiding local optima traps. To do so, the algorithm utilises a tabu list, \(s_T\), that keeps track of recently visited solutions, and an acceptance list, \(s_A\), that stores certain desirable solutions. The algorithm initialises both as empty lists, and sets the current and the best solution, \(s\) and \(s^*\), to the initial solution \(s_o\).

Thereafter, in each iteration, the algorithm generates a set of \(k\) random candidate solutions, \(s'\), from the neighbourhood of the current solution, \(N(s)\), based on the solution transformations that define the neighbourhood. From these candidates, the algorithm selects the one with the least objective function value, \(f\), that satisfies the tabu and acceptance criteria, and sets it as the current solution. If this current solution is better than the best solution, the algorithm updates the best solution as well.

The algorithm then adds the current solution to the tabu list to prevent the search from cycling back to it. To keep the list from growing indefinitely, the algorithm removes the oldest solution whenever the list exceeds a maximum size of \(m\) solutions. Hence, the tabu list behaves like a short-term memory of recently visited solutions that the algorithm needs to avoid. Nonetheless, certain previously visited solutions may be desirable due to their proximity to the best solution, and are hence allowed to recycle through the iterations. To this end, the algorithm adds the current solution to the acceptance list if it is within \(p\)-proximity of the best solution, i.e., if \(f(s) < (1 + p)f(s^*)\). This enables the algorithm to overrule the tabu criterion when updating the current solution (refer to the candidate selection and current solution update steps in the pseudo code).

This iterative process continues until the algorithm meets the convergence conditions pertaining to computational effort or solution quality, returning the best solution in the end.
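The interplay between the two lists can be sketched in a few lines of Python. This is a minimal illustration, assuming solutions are hashable values and reading the description above as follows: a candidate is admissible if it is not tabu, or if it is tabu but also on the acceptance list. The names `admissible`, `tabu`, and `accepted` are illustrative only and are not part of the lecture's implementation.

```python
from collections import deque

def admissible(candidate, tabu, accepted):
    """Return True if the candidate may become the current solution."""
    # Not tabu, or tabu but overruled by the acceptance list
    return candidate not in tabu or candidate in accepted

m = 3                        # maximum tabu list size
tabu = deque(maxlen=m)       # the oldest entry is dropped automatically once full
accepted = set()

for s in ["a", "b", "c", "d"]:   # visit four solutions in turn
    tabu.append(s)

accepted.add("c")            # pretend "c" was within p-proximity of the best

print(list(tabu))                        # ['b', 'c', 'd']
print(admissible("a", tabu, accepted))   # True: "a" has aged out of the tabu list
print(admissible("b", tabu, accepted))   # False: "b" is still tabu
print(admissible("c", tabu, accepted))   # True: tabu, but on the acceptance list
```

A bounded `deque` is used here only because it makes the short-term memory behaviour explicit; a plain list with `pop(0)` behaves the same way for small \(m\).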

Note that, unlike the exclusively exploitative Hill Climb algorithm, the Tabu Search algorithm balances exploration and exploitation of the solution landscape. It does so by keeping track of recently visited solutions to avoid, thus forcing exploration of the solution space (diversification), while storing desirable solutions to recycle, thus enabling exploitation (intensification). Nonetheless, this exploration-exploitation balance is contingent on the input parameters, namely, the number of candidate solutions \(k\), the size of the tabu list \(m\), and the proximity parameter \(p\). Thus, effective use of the Tabu Search algorithm in practical applications requires careful parameter tuning.
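To make the role of the proximity parameter \(p\) concrete, the sketch below evaluates the acceptance test \(f(s) < (1 + p)f(s^*)\) for a few values. It also shows that this multiplicative form implicitly assumes positive objective values: when \(f(s^*)\) is negative the threshold moves in the wrong direction, and when \(f(s^*) = 0\) no non-negative value passes. The second function, `within_proximity_abs`, is a hypothetical variant added purely for comparison and is not part of the lecture's algorithm.

```python
def within_proximity(f_s, f_best, p):
    """Acceptance test as stated in the text: f(s) < (1 + p) f(s*)."""
    return f_s < (1 + p) * f_best

def within_proximity_abs(f_s, f_best, p):
    """Hypothetical variant: tolerance scaled by |f(s*)|, so the sign does not matter."""
    return f_s < f_best + p * abs(f_best)

# Positive objective values: threshold is 10% above the best value
print(within_proximity(105.0, 100.0, 0.1))        # True
print(within_proximity(115.0, 100.0, 0.1))        # False

# Negative objective values: even the best solution itself fails the test
print(within_proximity(-100.0, -100.0, 0.1))      # False
print(within_proximity_abs(-100.0, -100.0, 0.1))  # True
```

For the case studies that follow, both objective functions are non-negative, so the original form of the test is adequate there.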

Owing to the ability of the Tabu Search algorithm to effectively escape local optima traps, it has been extensively deployed for optimisation in Transportation Engineering, especially in routing-based problems such as the vehicle routing problem or the dial-a-ride problem.

Pseudo Code#

Procedure \(\text{TS}(s_o, (N, k, m, p))\)

\(s ← s_o\) // initialise current solution \(s\) as the initial solution \(s_o\)

\(s^* ← s\) // initialise best solution \(s^*\) as the current solution

\(s_T ← \{\}\) // initialise tabu list \(s_T\)

\(s_A ← \{\}\) // initialise acceptance list \(s_A\)

while \(!\text{converged}\) do // repeat until converged

\(S' {R \atop ←} \{s'; s' ∈ N(s)\}_k\) // generate a set \(S'\) of \(k\) random candidates from neighborhood \(N\) of the current solution

\(s' ← \text{argmin} \{f(s'); s' ∈ S', s' ∉ s_T \setminus s_A\}\) // set new solution to the best candidate that is not tabu, or that is tabu but on the acceptance list

\(s ← s'\) // update the current solution to the new solution

if \(f(s) < f(s^*)\) then // if the current solution is better than the best solution

\(s^* ← s\) // update the best solution to the current solution

end if

\(s_T ← s_T \cup \{s\}\) // add the current solution to the tabu list

if \(|s_T| > m\) then // if size of the tabu list is greater than the maximum size \(m\)

\(\text{pop}(s_T)\) // remove the oldest solution from the tabu list

end if

if \(f(s) < (1 + p)f(s^*)\) then // if the current solution satisfies acceptance criterion \(p\)

\(s_A ← s_A \cup \{s\}\) // add the current solution to the acceptance list

end if

end while

return \(s^*\) // return the best solution

Implementation#

import random
import numpy as np
import matplotlib.pyplot as plt

def ts(s_o, N, k, m, p, n=100, t=1e-5):
    """
    Tabu Search Algorithm

    Note: the objective function f is read from the enclosing (global) scope.

    Parameters:
    - s_o: Initial solution
    - N: Neighborhood function
    - k: Number of candidate neighbors per iteration
    - m: Maximum size of tabu list
    - p: Acceptance criterion percentage
    - n: Maximum number of iterations
    - t: Convergence threshold

    Returns:
    - List of the best solution found so far at each iteration
    """
    s = s_o            # Current solution
    s_b = s            # Best solution found
    S = [s_b]          # Track best solutions over iterations
    S_t = []           # Tabu list
    S_a = []           # Acceptance list
    i = 1
    e = float('inf')   # Size of the most recent improvement
    converged = False
    while not converged:
        S_n = [N(s) for _ in range(k)]                              # Generate k random neighbors
        S_n = [s_n for s_n in S_n if s_n not in S_t or s_n in S_a]  # Drop tabu candidates unless on the acceptance list
        if S_n:                                                     # Skip the update if every candidate was filtered out
            s_n = S_n[np.argmin([f(s_n) for s_n in S_n])]           # Select best neighbour
            s = s_n                                                 # Update current solution
            if f(s) < f(s_b):                                       # If better than best found so far, update
                e = f(s_b) - f(s)
                s_b = s
            S_t.append(s)                                           # Add current solution to tabu list
            if len(S_t) > m:                                        # Maintain tabu list size
                S_t.pop(0)
            if f(s) < (1 + p) * f(s_b):                             # Acceptance condition
                S_a.append(s)
        S.append(s_b)                                               # Store the best solution at each iteration
        i += 1
        if i >= n or e <= t:                                        # Convergence condition
            converged = True
    return S
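Membership tests on Python lists, as used in the implementation above, scan the whole list for every candidate, and list-valued solutions (such as TSP tours) cannot be placed in a Python `set` directly. One possible alternative, sketched here under the assumption that solutions are sequences of hashable elements, stores each solution as a tuple in both a FIFO queue and a set. `TabuMemory` is a hypothetical helper and is not part of the lecture's implementation.

```python
from collections import deque

class TabuMemory:
    """Bounded FIFO memory with constant-time membership tests (illustrative)."""

    def __init__(self, m):
        self.m = m               # maximum number of stored solutions
        self._queue = deque()    # insertion order, oldest on the left
        self._keys = set()       # same solutions, for fast lookup

    def add(self, s):
        key = tuple(s)           # lists are unhashable, tuples are not
        if key in self._keys:    # already stored: keep its original position
            return
        self._queue.append(key)
        self._keys.add(key)
        if len(self._queue) > self.m:
            # Forget the oldest solution once the memory is full
            self._keys.discard(self._queue.popleft())

    def __contains__(self, s):
        return tuple(s) in self._keys

    def __len__(self):
        return len(self._queue)

memory = TabuMemory(m=2)
for tour in ([0, 1, 2], [0, 2, 1], [1, 0, 2]):
    memory.add(tour)

print([0, 1, 2] in memory)   # False: the oldest tour was forgotten
print([1, 0, 2] in memory)   # True
print(len(memory))           # 2
```

Unlike a plain list, this sketch does not store duplicates, which is a design choice rather than a requirement. For continuous problems, where exact revisits of floating-point solutions are rare, exact membership tests of either kind seldom trigger.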

Case Study#

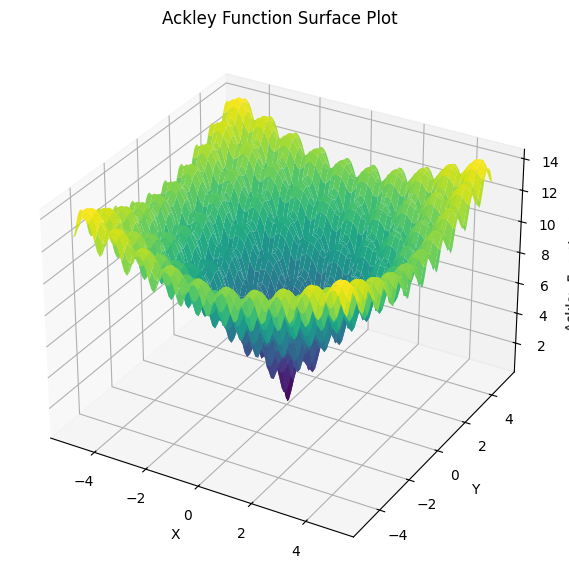

Ackley Function#

def f(s):
    """
    Computes the Ackley function for given solution s.

    Parameters:
    - s: Tuple of input coordinates

    Returns:
    - Function value at s
    """
    x, y = s
    return -20 * np.exp(-0.2 * np.sqrt((x**2 + y**2) / 2)) - np.exp((np.cos(2 * np.pi * x) + np.cos(2 * np.pi * y)) / 2) + 20 + np.exp(1)

# Generate a grid of (x, y) values
X, Y = np.meshgrid(np.linspace(-5, 5, 100), np.linspace(-5, 5, 100))
Z = np.array([[f(s) for s in zip(r, c)] for r, c in zip(X, Y)])

# Plot the Ackley function
fig = plt.figure(figsize=(7, 7))
ax = fig.add_subplot(111, projection='3d')
ax.plot_surface(X, Y, Z, cmap='viridis')

# Labels and title
ax.set_xlabel("X")
ax.set_ylabel("Y")
ax.set_zlabel("Ackley Function Value")
ax.set_title("Ackley Function Surface Plot")

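As a quick sanity check on the function definition, the sketch below evaluates the same Ackley expression on a whole grid at once and locates its smallest value. It is only an illustration: the name `ackley` and its two-argument signature are introduced here to avoid overwriting `f`, and the 101-point grid is chosen (rather than the 100-point grid used for plotting) so that the origin lies exactly on the grid.

```python
import numpy as np

def ackley(x, y):
    """Two-dimensional Ackley function; works on scalars and NumPy arrays alike."""
    return (-20 * np.exp(-0.2 * np.sqrt((x**2 + y**2) / 2))
            - np.exp((np.cos(2 * np.pi * x) + np.cos(2 * np.pi * y)) / 2)
            + 20 + np.e)

# Evaluate on the full grid in one vectorised call (no Python loops)
X, Y = np.meshgrid(np.linspace(-5, 5, 101), np.linspace(-5, 5, 101))
Z = ackley(X, Y)

# Locate the grid point with the smallest function value
i, j = np.unravel_index(np.argmin(Z), Z.shape)
print("Grid minimum at:", X[i, j], Y[i, j])
print("Value there:", Z[i, j])   # close to zero, up to floating-point rounding
```

The grid minimum falls at the origin, where the Ackley function attains its global minimum of zero, which gives a reference point for judging the Tabu Search result reported below.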

def N(s):
    x, y = s
    x += np.random.uniform(-0.5, 0.5)
    y += np.random.uniform(-0.5, 0.5)
    s = [x, y]
    return s

s_o = [random.uniform(-5, 5), random.uniform(-5, 5)]
S = ts(s_o, N, 10, 5, 0.1)
s_b = S[-1]
F = [f(s) for s in S]

# Report outcome
print("Best solution:", s_b)
print("Objective function value:", f(s_b))

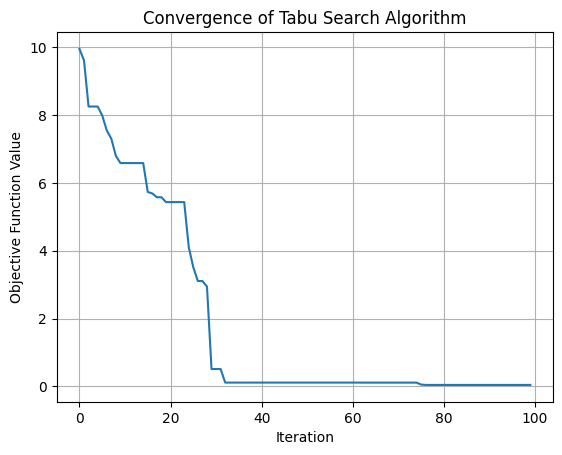

# Convergence plot
fig = plt.figure()
plt.plot(F)
plt.xlabel("Iteration")
plt.ylabel("Objective Function Value")
plt.title("Convergence of Tabu Search Algorithm")
plt.grid()
plt.show()

Best solution: [-0.007649750126353383, -0.009982769122940938]

Objective function value: 0.03977984488350872

Traveling Salesman Problem#

The Traveling Salesman Problem (TSP) is one of the most fundamental and extensively studied combinatorial optimization problems in operations research, wherein a salesman needs to visit each city in a given set exactly once and return to the starting city while minimizing the total travel distance. Here we use the att48 dataset, a well-known TSP benchmark consisting of 48 US cities.

| City | X | Y |
|---|---|---|
| 1 | 6734 | 1453 |
| 2 | 2233 | 10 |
| 3 | 5530 | 1424 |
| 4 | 401 | 841 |
| 5 | 3082 | 1644 |
| 6 | 7608 | 4458 |
| 7 | 7573 | 3716 |
| 8 | 7265 | 1268 |
| 9 | 6898 | 1885 |
| 10 | 1112 | 2049 |
| 11 | 5468 | 2606 |
| 12 | 5989 | 2873 |
| 13 | 4706 | 2674 |
| 14 | 4612 | 2035 |
| 15 | 6347 | 2683 |
| 16 | 6107 | 669 |
| 17 | 7611 | 5184 |
| 18 | 7462 | 3590 |
| 19 | 7732 | 4723 |
| 20 | 5900 | 3561 |
| 21 | 4483 | 3369 |
| 22 | 6101 | 1110 |
| 23 | 5199 | 2182 |
| 24 | 1633 | 2809 |
| 25 | 4307 | 2322 |
| 26 | 675 | 1006 |
| 27 | 7555 | 4819 |
| 28 | 7541 | 3981 |
| 29 | 3177 | 756 |
| 30 | 7352 | 4506 |
| 31 | 7545 | 2801 |
| 32 | 3245 | 3305 |
| 33 | 6426 | 3173 |
| 34 | 4608 | 1198 |
| 35 | 23 | 2216 |
| 36 | 7248 | 3779 |
| 37 | 7762 | 4595 |
| 38 | 7392 | 2244 |
| 39 | 3484 | 2829 |
| 40 | 6271 | 2135 |
| 41 | 4985 | 140 |
| 42 | 1916 | 1569 |
| 43 | 7280 | 4899 |
| 44 | 7509 | 3239 |
| 45 | 10 | 2676 |
| 46 | 6807 | 2993 |
| 47 | 5185 | 3258 |
| 48 | 3023 | 1942 |

import random
import numpy as np
import matplotlib.pyplot as plt

# Nodes (city indices 0..47 correspond to cities 1..48 in the table)
C = list(range(48))
X = [6734, 2233, 5530, 401, 3082, 7608, 7573, 7265, 6898, 1112, 5468, 5989, 4706, 4612, 6347, 6107, 7611, 7462, 7732, 5900, 4483, 6101, 5199, 1633, 4307, 675, 7555, 7541, 3177, 7352, 7545, 3245, 6426, 4608, 23, 7248, 7762, 7392, 3484, 6271, 4985, 1916, 7280, 7509, 10, 6807, 5185, 3023]
Y = [1453, 10, 1424, 841, 1644, 4458, 3716, 1268, 1885, 2049, 2606, 2873, 2674, 2035, 2683, 669, 5184, 3590, 4723, 3561, 3369, 1110, 2182, 2809, 2322, 1006, 4819, 3981, 756, 4506, 2801, 3305, 3173, 1198, 2216, 3779, 4595, 2244, 2829, 2135, 140, 1569, 4899, 3239, 2676, 2993, 3258, 1942]

# Arcs (Euclidean distance between every pair of cities)
A = np.zeros((48, 48))
for i in range(48):
    for j in range(48):
        A[i][j] = np.sqrt((X[i] - X[j])**2 + (Y[i] - Y[j])**2)

# Compute total route cost
def f(s):
    c = A[s[-1]][s[0]]                # Arc closing the tour back to the first city
    for i in range(len(s)-1):
        c += A[s[i]][s[i+1]]
    return c

# Generate a random 2-opt neighbour
def N(s):
    i, j = sorted(random.sample(range(len(s)), 2))
    s = s[:i] + s[i:j+1][::-1] + s[j+1:]  # Reverse the segment s[i..j]; slicing builds a new list
    return s
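Because a 2-opt move only replaces two arcs of the tour, the change in route cost can be computed from four distances instead of re-evaluating the entire tour. The sketch below illustrates this for a symmetric distance matrix and checks the shortcut against a full recomputation. It is an illustration under stated assumptions, not part of the lecture's implementation: the helper names `tour_length`, `two_opt`, and `two_opt_delta`, as well as the random 8-city instance, are introduced here for demonstration only.

```python
import math
import random

def tour_length(s, D):
    """Length of the closed tour s under distance matrix D."""
    n = len(s)
    return sum(D[s[i]][s[(i + 1) % n]] for i in range(n))

def two_opt(s, i, j):
    """Reverse the segment s[i..j] (same move as in the neighbourhood function)."""
    return s[:i] + s[i:j + 1][::-1] + s[j + 1:]

def two_opt_delta(s, i, j, D):
    """Cost change of the 2-opt move, assuming D is symmetric and i < j."""
    n = len(s)
    if i == 0 and j == n - 1:
        return 0.0                       # reversing the whole tour changes nothing
    a, b = s[i - 1], s[i]                # arc (a, b) is removed
    c, d = s[j], s[(j + 1) % n]          # arc (c, d) is removed
    return D[a][c] + D[b][d] - D[a][b] - D[c][d]

# Small random instance (hypothetical data, not att48)
random.seed(0)
pts = [(random.uniform(0, 100), random.uniform(0, 100)) for _ in range(8)]
D = [[math.dist(u, v) for v in pts] for u in pts]

s = list(range(8))
i, j = 2, 5
delta = two_opt_delta(s, i, j, D)
full = tour_length(two_opt(s, i, j), D) - tour_length(s, D)
print(abs(delta - full) < 1e-9)          # True
```

With \(k = 100\) candidates per iteration on a 48-city tour, such an incremental evaluation could reduce the cost of ranking candidates considerably, although the implementation above recomputes the full tour cost for simplicity.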

s_o = random.sample(C, len(C))
S = ts(s_o, N, 100, 75, 0.1, 1000, 1e-5)
s_b = S[-1]
F = [f(s) for s in S]

s = s_b
x = [X[c] for c in s]
y = [Y[c] for c in s]
x.append(X[s[0]])
y.append(Y[s[0]])

# Report outcome
print("Best solution:", s_b)
print("Objective function value:", f(s_b))

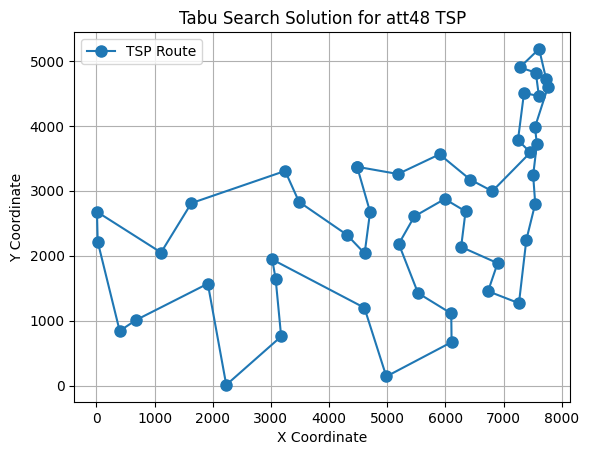

# TSP
plt.figure()
plt.plot(x, y, 'o-', markersize=8, label="TSP Route")
plt.title("Tabu Search Solution for att48 TSP")
plt.xlabel("X Coordinate")
plt.ylabel("Y Coordinate")
plt.legend()
plt.grid()
plt.show()

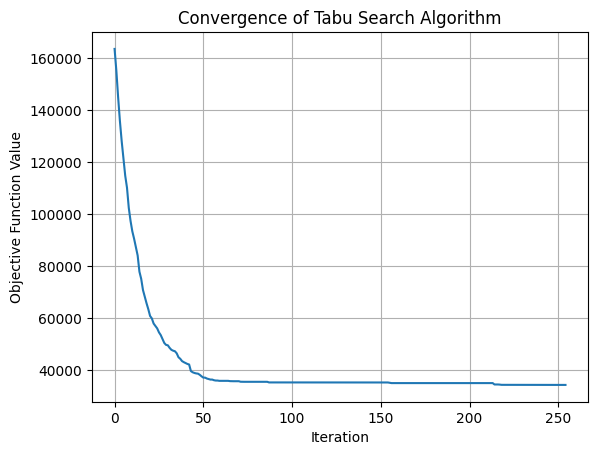

# Convergence plot
fig = plt.figure()
plt.plot(F)
plt.xlabel("Iteration")
plt.ylabel("Objective Function Value")
plt.title("Convergence of Tabu Search Algorithm")
plt.grid()
plt.show()

[41, 9, 29, 38, 16, 0, 33, 12, 31, 4, 15, 13, 36, 8, 35, 11, 32, 39, 3, 17, 14, 25, 24, 1, 37, 19, 44, 20, 21, 34, 30, 27, 2, 26, 5, 46, 7, 43, 28, 18, 22, 40, 47, 10, 23, 45, 42, 6]

Best solution: [20, 46, 19, 32, 45, 17, 35, 29, 5, 26, 42, 16, 18, 36, 27, 6, 43, 30, 37, 7, 0, 8, 39, 14, 11, 10, 22, 2, 21, 15, 40, 33, 47, 4, 28, 1, 41, 25, 3, 34, 44, 9, 23, 31, 38, 24, 13, 12]

Objective function value: 34336.43784400215